Exploring Code Llama by Meta

Many large IT companies have joined the global race to build AI tools, and Meta is no exception. Not long ago, it released its own neural network for code generation. In this article, we explain what Code Llama is, which technologies underlie it, what it can do, and how safe it is. You'll also learn how to generate code with it and how this service and others like it may change the future of programming.

What is Code Llama

In August 2023, Meta added a new product to its AI tools: Code Llama. It is a versatile AI tool for automating coding that quickly generates code from text prompts. It can also complete code that users have already written and help find and fix bugs in it. Because the model can generate natural language as well, it produces not only code but also explanations of that code. Code Llama supports most popular programming languages, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, and Bash. According to Meta, it provides stable generations with up to 100,000 tokens of context. This large context window lets it take more of an existing codebase into account and generate longer programs.

According to Meta, Code Llama makes programmers' work more efficient and boosts their productivity. It frees developers from routine tasks so they can devote more time to complex and important work. Because the model is openly released, anyone can use it for research and commercial purposes free of charge, under the terms of Meta's community license.

The Technology Behind Code Llama

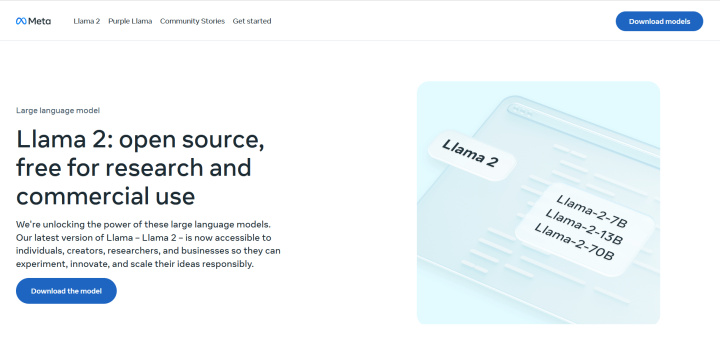

A month before releasing its coding model, Meta introduced Llama 2, the second version of its open-source LLM. This language model forms the basis of Code Llama. Like its predecessor, Llama 2 is built on the Transformer architecture, originally developed by researchers at Google. Its capabilities go well beyond code generation: it is a general-purpose model that generates and processes natural-language text.
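To make the Transformer idea more concrete, here is a minimal sketch of its core operation, scaled dot-product attention, with the causal mask used by decoder-only models such as Llama 2. It is a simplified illustration, not Meta's code: it assumes a single attention head and tiny hand-written matrices, and it omits the extra components Llama 2 adds on top (such as rotary position embeddings and RMSNorm). The function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with a causal mask.

    Row i of q, k and v belongs to token i. Token i may only look at tokens
    0..i, which is what lets a decoder-only model generate text left to right.
    """
    d_k = len(k[0])
    outputs = []
    for i, q_i in enumerate(q):
        # Similarity of token i with itself and every earlier token, scaled by sqrt(d_k).
        scores = [
            sum(a * b for a, b in zip(q_i, k[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible value vectors.
        outputs.append([
            sum(w * v[j][c] for j, w in enumerate(weights))
            for c in range(len(v[0]))
        ])
    return outputs


q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(causal_self_attention(q, k, v))
```

The first token can only attend to itself, so its output equals its own value vector; later tokens blend the values of everything that came before them.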

While developing Llama 2, Meta focused on improving the performance of relatively small AI models without increasing their number of parameters. Many closed-source generative neural networks have hundreds of billions of parameters. Compared to them, Meta's LLM is a real "Thumbelina".

Llama 2 is available in three sizes:

- 7 billion parameters (7B);

- 13 billion parameters (13B);

- 70 billion parameters (70B).

Its moderate resource requirements and open-source code make it accessible to startup companies and scientific communities that cannot invest heavily in infrastructure.

Model Training

Llama 2 was trained on 40% more data than its predecessor, Llama 1, and its context length is twice as long (4,096 tokens versus 2,048).

The training data consisted of 2 trillion tokens from publicly available sources, such as web pages, Wikipedia articles, and books from Project Gutenberg. Meta does not disclose which specific code sources were used to train its LLMs. Its representatives only say that the code models were trained mainly on a "near-deduplicated dataset of publicly available code." However, some experts believe that a significant part of the dataset came from Stack Overflow, a portal popular among programmers.
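Meta does not describe how its code data was deduplicated. As a generic illustration of what "near-deduplicated" means, the sketch below drops a file when its word 3-shingles (overlapping three-word windows) overlap too much with a file that was already kept, measured by Jaccard similarity. The 0.8 threshold, the shingle size, and the function names are assumptions for illustration, not Meta's pipeline.

```python
from typing import List, Set, Tuple

Shingles = Set[Tuple[str, ...]]


def shingles(text: str, n: int = 3) -> Shingles:
    """Return the set of n-word windows ("shingles") in a whitespace-split text."""
    words = text.split()
    if len(words) < n:
        return {tuple(words)}
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: Shingles, b: Shingles) -> float:
    """Size of the intersection divided by the size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_dedup(docs: List[str], threshold: float = 0.8, n: int = 3) -> List[str]:
    """Keep each document unless it is a near-duplicate of one already kept."""
    kept: List[str] = []
    kept_shingles: List[Shingles] = []
    for doc in docs:
        s = shingles(doc, n)
        if all(jaccard(s, t) < threshold for t in kept_shingles):
            kept.append(doc)
            kept_shingles.append(s)
    return kept


files = [
    "def add(a, b): return a + b",
    "def add(a, b):   return a + b",  # same code, different spacing
    "def mul(a, b): return a * b",
]
print(near_dedup(files))
# → ['def add(a, b): return a + b', 'def mul(a, b): return a * b']
```

Comparing every pair of files like this does not scale to billions of documents, so large pipelines usually approximate it with MinHash signatures and locality-sensitive hashing.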

To train Llama 2, Meta used its Research Super Cluster and several internal production clusters with Nvidia A100 GPUs. Training took from 184K GPU hours for the 7B model to 1.7M GPU hours for the 70B model.
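GPU hours are easier to grasp when converted into calendar time. The sketch below uses the rounded figures from the text and assumes a hypothetical cluster of 2,048 A100 GPUs working in parallel with perfect scaling; the cluster size is an illustration, not a number reported by Meta.

```python
def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Idealized training time in days if the work splits perfectly across GPUs."""
    return gpu_hours / num_gpus / 24


GPU_HOURS = {"7B": 184_000, "70B": 1_700_000}  # rounded figures from the text
CLUSTER_SIZE = 2_048  # hypothetical number of A100 GPUs running in parallel

for model, hours in GPU_HOURS.items():
    print(f"{model}: ~{wall_clock_days(hours, CLUSTER_SIZE):.1f} days")

# Ratio of compute spent on the 70B model versus the 7B model.
print(f"ratio: {GPU_HOURS['70B'] / GPU_HOURS['7B']:.1f}x")
```

Under these assumptions the 7B model needs under 4 days and the 70B model about 35 days. The roughly 9x ratio is close to the 10x difference in parameter count, which is what you would expect when all sizes are trained on the same 2 trillion tokens, since training compute grows roughly with parameters times tokens.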

Comparison with Competitors

In benchmark comparisons, Llama 2 70B has been rated above other open-source LLMs. Its results are close to GPT-3.5 and on par with PaLM on most benchmarks, but it lags behind GPT-4 and PaLM 2. Despite these limitations, including a noticeable gap to GPT-3.5 on coding benchmarks, the model proved a strong foundation for a specialized programming assistant.

Open availability is considered one of its main advantages over proprietary AI models such as OpenAI GPT, Anthropic Claude, and Google PaLM. After accepting Meta's license, anyone can download this LLM from the official website (the smallest version, 7B, weighs about 13 GB), run it on their own computer, and study its technical documentation. Other popular models (GPT, Claude, PaLM) are at best available only through an API.
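The size of a downloaded model follows from simple arithmetic: the number of parameters times the bytes used to store each one. The sketch assumes 16-bit weights (2 bytes per parameter) and nominal parameter counts; the real "7B" model has slightly fewer than 7 billion parameters, and the function name is hypothetical.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_size_gib(num_params: float, dtype: str = "fp16") -> float:
    """Approximate size of the model weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    print(f"{name}: {weight_size_gib(params):.1f} GiB in fp16")
```

This gives about 13 GiB for 7B, 24 GiB for 13B, and 130 GiB for 70B. Running a model needs more memory than this, because activations and the attention cache also take space.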

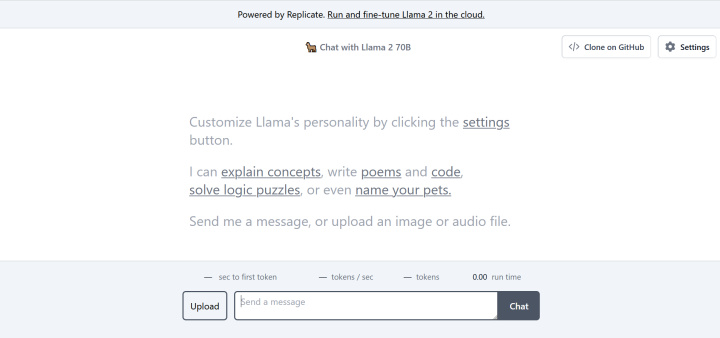

Llama Chat

Besides Code Llama, Meta used its new LLM to build a smart chatbot, Llama Chat. Its largest and most capable version is based on the 70-billion-parameter (70B) model, and smaller versions are also available. It performs a wide range of text generation and analysis tasks: explaining concepts, writing poetry and program code, solving logic puzzles, and so on.

The chatbot was trained not only on the main dataset of publicly available Internet data: it was also fine-tuned using more than 1 million human annotations.


Like other products from the Llama line, the chatbot is open-source. Thanks to this, it is free and accessible to everyone.

Versions of Code Llama

Code Llama was created by further training Llama 2 on specialized programming datasets. The developers released three versions of the model:

- with 7B parameters;

- with 13B parameters;

- with 34B parameters.

Each of them was trained on 500B tokens of code and code-related data. However, they differ in performance and serving requirements. For example, the 7B model can be served on a single GPU. The largest, 34B, model handles complex and resource-intensive coding tasks more effectively, while the smaller 7B and 13B models are better suited to tasks that require low latency, such as completing code in real time. Moreover, these smaller models have fill-in-the-middle (FIM) capability, which lets them insert code into existing code and support code completion out of the box.
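Fill-in-the-middle means the model sees the code before and after a gap and generates what belongs in between. The sketch below shows how such a prompt is laid out in prefix-suffix-middle order. The sentinel spellings `<PRE>`, `<SUF>`, and `<MID>` follow the format commonly described for Code Llama, but treat them as an assumption: in practice the tokenizer inserts them as special tokens, so prefer the infilling support in the official reference code or your inference library over hand-built strings. The function name is hypothetical.

```python
def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Arrange code around a gap in prefix-suffix-middle (PSM) order.

    The model is expected to generate the missing middle part after <MID>.
    Sentinel spellings are an assumption; real tokenizers add them as
    special tokens rather than plain text.
    """
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


# Code before and after the gap the model should fill.
prefix = 'def remove_non_ascii(s: str) -> str:\n    """'
suffix = "\n    return result\n"
print(build_fim_prompt(prefix, suffix))
```

The prefix comes first and the suffix second, so the model already knows how the function ends (here, that it must define `result`) before it writes the middle.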

In addition to the three standard versions of the model, two specialized variants are available in the Code Llama line:

- Code Llama – Python. A language-specialized variant that was further fine-tuned on 100B tokens of Python code. Meta released it because Python is the most benchmarked language for code generation and plays an important role in the AI community.

- Code Llama – Instruct. This variant is fine-tuned to understand natural-language instructions and respond in natural language. The developers recommend it for generating code from text prompts.
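Code Llama – Instruct expects requests wrapped in the same `[INST] ... [/INST]` convention as Llama 2's chat models, with an optional system message between `<<SYS>>` markers. The helper below is a minimal sketch of that layout for a single request. It assumes the tokenizer adds the beginning-of-sequence token itself, and the function name is hypothetical, so check the official model card for the exact format before relying on it.

```python
from typing import Optional

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_instruct_prompt(user_message: str, system_message: Optional[str] = None) -> str:
    """Wrap a single user request in the [INST] ... [/INST] instruction format."""
    content = user_message
    if system_message:
        # The optional system message goes inside the first instruction block.
        content = f"{B_SYS}{system_message}{E_SYS}{user_message}"
    return f"{B_INST} {content.strip()} {E_INST}"


print(build_instruct_prompt(
    "Write a Bash command that counts the lines in every .py file.",
    system_message="Answer with code only.",
))
```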

Performance Testing

To evaluate the performance of its AI model, Meta used two well-known tests: HumanEval and Mostly Basic Python Programming (MBPP). According to the results of code generation testing, Code Llama outperformed other specialized open-source LLMs and even its mother neural network Llama 2.
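HumanEval and MBPP measure functional correctness: a generated function counts as solved only if it passes the problem's unit tests. The sketch below mimics that idea with a HumanEval-style layout (a prompt containing the function signature, a model completion, and a `check(candidate)` test function). The example problem and the helper name are hypothetical, and the official harness runs untrusted code in a separate, time-limited process, whereas plain `exec` is used here only for illustration.

```python
from typing import Any, Dict


def passes_tests(prompt: str, completion: str, test_code: str, entry_point: str) -> bool:
    """Return True if prompt + completion defines a function that passes check()."""
    namespace: Dict[str, Any] = {}
    try:
        # Define the candidate function from the prompt and the model's completion.
        exec(prompt + completion, namespace)
        # Define check(candidate), which holds the problem's unit tests.
        exec(test_code, namespace)
        namespace["check"](namespace[entry_point])
        return True
    except Exception:
        # Syntax errors, runtime errors and failed assertions all count as a miss.
        return False


# Hypothetical problem in HumanEval style.
prompt = 'def add(a, b):\n    """Return the sum of a and b."""\n'
test_code = (
    "def check(candidate):\n"
    "    assert candidate(2, 3) == 5\n"
    "    assert candidate(-1, 1) == 0\n"
)

print(passes_tests(prompt, "    return a + b\n", test_code, "add"))  # True
print(passes_tests(prompt, "    return a - b\n", test_code, "add"))  # False
```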

The 34B version of Code Llama – Python scored 53.7% on HumanEval, the highest result among open models of comparable size at the time. To be fair, GPT-4 scored 67% on this test, but it is widely believed to be a much larger model (OpenAI has not disclosed its parameter count). The standard versions of Code Llama showed more modest HumanEval results: 33.5% (7B), 36% (13B), and 48.8% (34B).
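These percentages are pass@1 scores: the share of problems for which a generated solution passes the tests. When several samples are generated per problem, the HumanEval authors proposed an unbiased estimator, pass@k = 1 − C(n−c, k) / C(n, k), where n samples were generated and c of them are correct; the benchmark score is the average over all problems. A minimal sketch of the per-problem estimate (the function name is ours):

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed the tests, k: sample budget.
    Returns the probability that at least one of k samples drawn without
    replacement from the n generated ones is correct.
    """
    if n - c < k:
        # Every possible set of k samples contains at least one correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


print(pass_at_k(n=10, c=3, k=1))  # about 0.3
print(pass_at_k(n=10, c=3, k=5))  # about 0.917
```

When a score is computed with greedy decoding, there is one sample per problem, so pass@1 is simply the fraction of problems solved: for example, 53.7% of HumanEval's 164 problems is about 88 problems.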

Code Generator Security

Code Llama is distributed as a downloadable model rather than as a single hosted service, so the protection of your data depends largely on how you run it:

- Data protection. If you run the model on your own hardware, your requests and the resulting code never have to leave your infrastructure. If you use Code Llama through a hosted service, check how that provider encrypts and stores your data.

- Privacy policy. When the model runs locally, your prompts are not sent to Meta. With a hosted service, the provider's privacy policy determines how your personal data is handled. In all cases, use of the model itself is governed by Meta's license and Acceptable Use Policy.

- Updates. Meta publishes new model versions and documentation, but keeping a self-hosted deployment patched and secure is the responsibility of whoever operates it.

- Access to projects. In a self-hosted setup, you decide who can access the model and your code, so you can grant access only to team members you trust.

In addition, Meta took into account the likely risks associated with its flagship AI product. In particular, the developers quantitatively assessed the risk of Code Llama generating malicious code: they sent it prompts designed to solicit such code and analyzed its responses.

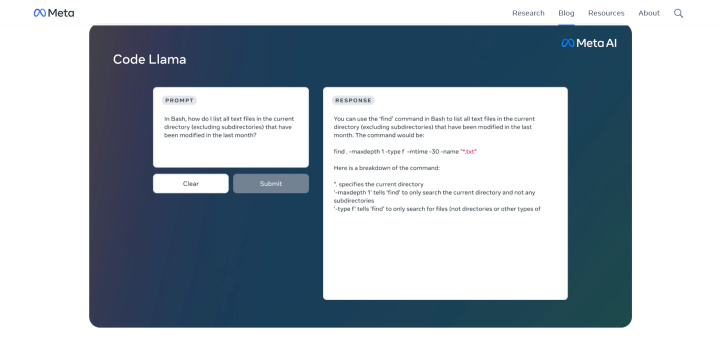

How to Use Code Llama

To code with Code Llama and get the most out of this powerful tool, follow our instructions.

- Preparation. Make sure you have access to Code Llama. You can request the model weights from Meta, which requires accepting its license, and run them on your own hardware, or use a platform that hosts the model.

- Determining the goal. Before you start, decide exactly what you want to get from Code Llama. Clearly state the task that your future code must perform. This could be creating a web application, automating a task, or developing a data processing algorithm.

- Launching Code Llama. Start the model locally or open the interface of your hosting platform. Choose the variant that fits your task: Code Llama – Instruct for natural-language requests, Code Llama – Python for Python code, or the base model for code completion.

- Formulating the request. Describe your task in natural language. Be as specific and detailed as possible so that the model can accurately understand your request. For example, if you need to create a web form to collect data, describe which fields the form should contain and what should happen after it is submitted.

- Code generation. Submit your request and wait for the model to process it and return the result.

- Revision and adjustment. Analyze the generated code. Check it for compliance with your requirements, operating logic, and potential errors. If necessary, make adjustments manually or repeat the request, clarifying the details.

- Testing. Before you use your code in a real project, test it in a controlled environment. This will help ensure that the code performs all specified functions and is stable.

- Deployment. Once your code has been successfully tested and verified to work correctly, you can deploy it to production.
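The revision and testing steps can be made concrete with ordinary unit tests. Below, `validate_form` is a hypothetical stand-in for code that Code Llama might return for the web-form request described earlier, and the tests check it against the stated requirements before deployment. The function, the field names, and the email pattern are all assumptions for illustration.

```python
import re
import unittest


def validate_form(data: dict) -> list:
    """Hypothetical generated code: return a list of validation errors."""
    errors = []
    if not data.get("name", "").strip():
        errors.append("name is required")
    # Deliberately simple email pattern: something@something.something
    if not re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", data.get("email", "")):
        errors.append("email is invalid")
    return errors


class ValidateFormTest(unittest.TestCase):
    def test_valid_input(self):
        self.assertEqual(validate_form({"name": "Ada", "email": "ada@example.com"}), [])

    def test_missing_name(self):
        errors = validate_form({"name": "  ", "email": "ada@example.com"})
        self.assertIn("name is required", errors)

    def test_bad_email(self):
        self.assertIn("email is invalid", validate_form({"name": "Ada", "email": "ada@"}))
```

Save the code to a file such as `form_check.py` and run it with `python -m unittest form_check.py`. A failing test points to a requirement the generated code does not yet meet, which is a cue to fix it by hand or refine the request.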

By following these instructions, you can effectively use Code Llama to create high-quality code, saving your time and resources.

The Future of AI in Coding with Code Llama

Meta's Code Llama is a prime example of how the future is starting to manifest itself today. This tool heralds a new era where AI will become an integral part of software development. It will offer solutions from automating routine tasks to creating complex algorithms.

The release of Code Llama did not attract as much interest from the AI community as OpenAI's GPT-4. Nevertheless, it marks a notable stage in the integration of AI into programming. Meta did more than create a functional and useful tool for programmers: it made the model free and openly available. Now, not only large companies and research organizations can use the capabilities of Code Llama, but also small research groups, non-profit organizations, startups, and even individual users.

Meta is convinced that open-source neural networks offer advantages both for innovation and for security. Publicly available models can be continuously tested and improved by their users, which helps identify and fix their shortcomings and vulnerabilities quickly. Ultimately, this should accelerate innovations that improve people's lives.

In the future, we can expect AI like Code Llama to become more adaptive and intuitive. It will offer personalized solutions based on users' previous experiences and preferences. Teaching AI to better understand the context of a task will also be an important aspect. This will allow it to generate even more accurate and optimized code. Moreover, integrating artificial intelligence into the development process will promote knowledge sharing and a better understanding of code. This will make programming accessible to a wide range of people who do not have deep technical knowledge.

Conclusion

Code Llama by Meta is an LLM for coding based on the second version of the Llama model. It has established itself as a versatile AI assistant for programmers. The system understands requests both in natural language and in the form of code. Its main advantage is open availability: anyone can use this LLM for personal, research, or commercial purposes free of charge, subject to the terms of Meta's community license.
