Designing ETL Processes for Data Warehousing

Designing efficient ETL (Extract, Transform, Load) processes is crucial for the success of any data warehousing project. ETL serves as the backbone, ensuring data is accurately extracted from source systems, transformed into a usable format, and loaded into the data warehouse. This article explores best practices, tools, and strategies to optimize ETL workflows for robust and scalable data warehousing solutions.

Introduction

An ETL pipeline moves data from many operational sources into a single, centralized repository, and its design determines how accurate and timely that data is. A well-designed pipeline turns raw records into consistent, query-ready data that supports decision-making and strategic planning. The process has three stages:

- Extract: Gathering data from multiple sources, such as databases, APIs, and flat files.

- Transform: Converting the extracted data into a suitable format or structure for analysis.

- Load: Inserting the transformed data into the data warehouse for storage and future retrieval.
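The three stages can be sketched end to end in a few lines of Python. This is a minimal illustration that assumes a CSV export as the source and an in-memory SQLite database standing in for the warehouse; the `fact_orders` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical flat-file export standing in for a real source system.
SOURCE_CSV = """order_id,customer,amount
1, alice ,19.99
2,bob,5.00
3,alice,42.50
"""


def extract(text):
    """Extract: read raw rows (all values are strings) from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: cast types and normalize customer names."""
    return [
        (int(r["order_id"]), r["customer"].strip().title(), float(r["amount"]))
        for r in rows
    ]


def load(conn, records):
    """Load: write the transformed records into a warehouse table."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute(
            "CREATE TABLE IF NOT EXISTS fact_orders "
            "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
        )
        conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", records)


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
print(conn.execute(
    "SELECT customer, COUNT(*) FROM fact_orders GROUP BY customer ORDER BY customer"
).fetchall())  # [('Alice', 2), ('Bob', 1)]
```

Keeping each stage in its own function makes it easier to test, rerun, or replace one stage without touching the others.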

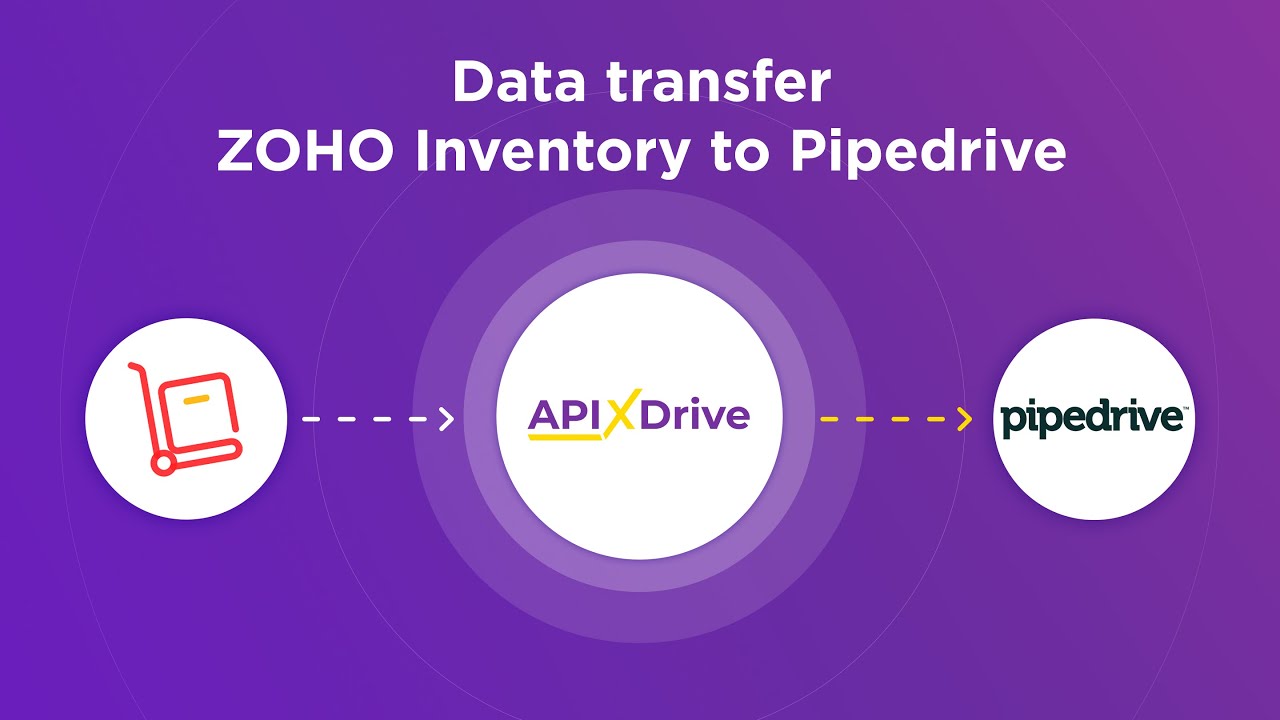

Modern ETL tools and services, such as ApiX-Drive, streamline integration by automating data extraction and transformation across many applications and platforms. They reduce manual effort, lower the risk of errors, and help make data available on time, so organizations can spend more of their effort on data analysis and less on the technical complexities of data integration.

Data Extraction

Data extraction is the first step in the ETL process: raw data is gathered from its sources for further processing. It begins with identifying those sources, which may include databases, cloud storage, APIs, and flat files. The goal is to collect data that is complete and accurate, because every later transformation and load depends on it. Extraction methods therefore need to handle each source's format and preserve data integrity, for example by checking that required fields are present and recording which source each row came from.
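One way to handle several source formats is to map each format to its own reader and convert everything to a common row shape. The sketch below assumes CSV and JSON payloads that have already been fetched as text; the source names, the `REQUIRED_FIELDS` tuple, and the `_source` lineage column are hypothetical choices for illustration.

```python
import csv
import io
import json

# Hypothetical: fields every extracted row must carry to be usable downstream.
REQUIRED_FIELDS = ("id", "email")


def extract_csv(text):
    """Read CSV text into a list of dicts (values are strings)."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json(text):
    """Read a JSON array (or a single object) into a list of dicts."""
    data = json.loads(text)
    return data if isinstance(data, list) else [data]


EXTRACTORS = {"csv": extract_csv, "json": extract_json}


def extract_all(sources):
    """Extract rows from each (name, format, payload) source.

    Each row is tagged with its origin, and rows missing a required field
    are set aside instead of being silently dropped.
    """
    good, rejected = [], []
    for name, fmt, payload in sources:
        for row in EXTRACTORS[fmt](payload):
            row = {**row, "_source": name}
            if all(row.get(f) not in (None, "") for f in REQUIRED_FIELDS):
                good.append(row)
            else:
                rejected.append(row)
    return good, rejected


# Hypothetical payloads, already fetched from a file export and an API.
SOURCES = [
    ("crm_export", "csv", "id,email\n1,a@example.com\n2,\n"),
    ("web_api", "json", '[{"id": 3, "email": "c@example.com"}]'),
]
good, rejected = extract_all(SOURCES)
print(len(good), len(rejected))  # 2 1
```

Rejected rows are returned rather than discarded so they can be logged or quarantined for review. Note that the CSV reader yields strings while JSON keeps its native types, so type normalization is left to the transformation step.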

Modern tools and services, such as ApiX-Drive, can significantly streamline data extraction. ApiX-Drive offers ready-made integrations with a wide range of data sources, enabling automated extraction without extensive coding. Such tools reduce the time and effort needed to set up and maintain data pipelines, and many include built-in error handling and data validation that help keep invalid records from reaching the transformation stage. This automation improves both the efficiency and the reliability of the ETL process.

Data Transformation

Data transformation is a critical step in the ETL process, ensuring that raw data is converted into a format suitable for analysis and reporting. This involves cleansing, structuring, and enriching the data to meet the specific requirements of the data warehouse and the business needs.

- Data Cleansing: Remove duplicates, correct errors, and handle missing values to ensure data quality.

- Data Structuring: Organize the data into predefined schemas and formats that align with the data warehouse architecture.

- Data Enrichment: Enhance the data by integrating additional information from external sources, such as demographic data or industry benchmarks.
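The three transformation tasks above map naturally onto small, composable functions. This sketch assumes dictionary rows with hypothetical `id`, `name`, `country`, and `signup` fields, and uses a hard-coded `REGION_BY_COUNTRY` table as a stand-in for an external enrichment source.

```python
from datetime import date

# Hypothetical enrichment source: region by ISO country code.
REGION_BY_COUNTRY = {"US": "North America", "DE": "Europe", "JP": "Asia"}


def cleanse(rows):
    """Remove duplicate ids, trim text, and fill missing values."""
    seen, out = set(), []
    for r in rows:
        rid = int(r["id"])
        if rid in seen:
            continue  # duplicate: keep the first occurrence only
        seen.add(rid)
        out.append({
            "id": rid,
            "name": (r.get("name") or "").strip(),
            "country": (r.get("country") or "").strip().upper() or "UNKNOWN",
            "signup": r.get("signup") or None,
        })
    return out


def enrich(rows):
    """Add a region attribute looked up from the reference table."""
    return [{**r, "region": REGION_BY_COUNTRY.get(r["country"], "Other")} for r in rows]


def structure(rows):
    """Conform to the target schema: fixed column order, typed dates."""
    return [
        (r["id"], r["name"], r["country"], r["region"],
         date.fromisoformat(r["signup"]) if r["signup"] else None)
        for r in rows
    ]


raw = [
    {"id": "1", "name": " Ada ", "country": "us", "signup": "2024-01-15"},
    {"id": "1", "name": "Ada", "country": "US", "signup": "2024-01-15"},
    {"id": "2", "name": "Kenji", "country": "", "signup": ""},
]
records = structure(enrich(cleanse(raw)))
for rec in records:
    print(rec)
```

The order (cleanse, enrich, structure) is a design choice: enrichment keys on the cleaned country code, so it runs after cleansing, and the tuple shape is produced last so it matches the target table definition.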

Tools like ApiX-Drive can streamline data transformation by automating integrations and data flows between sources and destinations. Using such services reduces manual effort and errors and helps keep data current and ready for analysis, which saves time and improves the accuracy of the transformation process.

Data Loading

The data loading phase moves transformed data into the data warehouse. It must load data accurately and efficiently while preserving its integrity and consistency, and how it is planned and executed has a significant effect on the overall performance of the warehouse.

During the data loading phase, consider factors such as data volume, load frequency, and loading method. The right strategy depends largely on whether a run is a full load or an incremental load: a full load reloads the entire dataset, while an incremental load applies only the records that are new or changed since the previous run.
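The contrast between a full and an incremental load can be sketched against SQLite. This assumes a hypothetical `dim_customer` table whose rows carry an ISO-8601 `updated_at` timestamp; the incremental path uses the latest timestamp already in the target as its high-water mark and upserts with `INSERT ... ON CONFLICT DO UPDATE`, which requires SQLite 3.24 or later.

```python
import sqlite3

UPSERT = (
    "INSERT INTO dim_customer (id, name, updated_at) VALUES (?, ?, ?) "
    "ON CONFLICT(id) DO UPDATE SET name = excluded.name, "
    "updated_at = excluded.updated_at"
)


def full_load(conn, rows):
    """Full load: replace the whole target table with the current snapshot."""
    with conn:
        conn.execute("DELETE FROM dim_customer")
        conn.executemany(
            "INSERT INTO dim_customer (id, name, updated_at) VALUES (?, ?, ?)", rows
        )


def incremental_load(conn, rows):
    """Incremental load: upsert only rows changed since the high-water mark."""
    (watermark,) = conn.execute(
        "SELECT COALESCE(MAX(updated_at), '') FROM dim_customer"
    ).fetchone()
    # Same-format ISO-8601 strings sort chronologically as plain text.
    changed = [r for r in rows if r[2] > watermark]
    with conn:
        conn.executemany(UPSERT, changed)
    return len(changed)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE dim_customer (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
)
full_load(conn, [(1, "Ada", "2024-01-01T00:00:00"), (2, "Bob", "2024-01-01T00:00:00")])

source = [
    (1, "Ada", "2024-01-01T00:00:00"),     # unchanged: skipped
    (2, "Robert", "2024-02-01T00:00:00"),  # changed: updated
    (3, "Cy", "2024-02-02T00:00:00"),      # new: inserted
]
print(incremental_load(conn, source))  # 2
```

Deriving the watermark from the target table keeps the sketch short; pipelines often store it in a separate control table instead and allow for late-arriving or clock-skewed source rows, which a strict `>` comparison would miss.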

- Batch Loading: Suitable for large volumes of data, where data is loaded in chunks at scheduled intervals.

- Real-Time Loading: Ideal for scenarios requiring immediate data availability, where data is loaded as soon as it is received.

- Incremental Loading: Efficient for updating only the changes since the last load, reducing load times and resource usage.
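Batch loading can be approximated by splitting the input into fixed-size chunks and committing each chunk in its own transaction. The sketch assumes a hypothetical `fact_events` table and a default batch size of 1,000 rows, which is an illustrative value rather than a recommendation.

```python
import sqlite3
from itertools import islice


def chunked(iterable, size):
    """Yield successive lists of at most `size` items."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch


def batch_load(conn, rows, batch_size=1000):
    """Insert rows batch by batch, one transaction per batch.

    A failure rolls back only the batch in progress; earlier batches stay committed.
    """
    loaded = 0
    for batch in chunked(rows, batch_size):
        with conn:
            conn.executemany("INSERT INTO fact_events (id, payload) VALUES (?, ?)", batch)
        loaded += len(batch)
    return loaded


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE fact_events (id INTEGER PRIMARY KEY, payload TEXT)")
events = ((i, f"event-{i}") for i in range(2500))
print(batch_load(conn, events))  # 2500
```

Because each batch commits independently, a failure partway through leaves earlier batches in place, so a rerun needs either an idempotent write (such as an upsert keyed on the primary key) or a way to resume after the last committed batch.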

Tools like ApiX-Drive can facilitate the data loading process by automating integrations and ensuring seamless data transfer. By leveraging such services, organizations can streamline their ETL workflows, minimize errors, and enhance the reliability of their data warehouse systems.

Evaluation and Monitoring

Effective evaluation and monitoring of ETL processes are crucial for ensuring data quality and system performance in data warehousing. Regularly scheduled evaluations help identify bottlenecks, data inconsistencies, and performance issues. Implementing comprehensive logging and error-handling mechanisms can provide valuable insights into the ETL flow, allowing for timely detection and resolution of issues. Utilizing dashboards and automated reports can further streamline the monitoring process, offering real-time visibility into the health of ETL pipelines.
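Logging and per-row error handling can be combined in a small wrapper that counts what each step read, produced, and rejected. This is a sketch using Python's standard `logging` module; the `RunStats` class, the `run_step` helper, and the 10% rejection threshold are hypothetical.

```python
import logging
import time
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")


@dataclass
class RunStats:
    """Counters for one pipeline step, suitable for a dashboard or alert."""
    rows_read: int = 0
    rows_ok: int = 0
    rows_rejected: int = 0
    errors: list = field(default_factory=list)


def run_step(stats, rows, process, max_reject_rate=0.1):
    """Apply `process` to each row, logging bad rows instead of aborting the run."""
    start = time.perf_counter()
    out = []
    for row in rows:
        stats.rows_read += 1
        try:
            out.append(process(row))
            stats.rows_ok += 1
        except (KeyError, ValueError) as exc:  # data problems, not code bugs
            stats.rows_rejected += 1
            stats.errors.append(f"{row!r}: {exc}")
            log.warning("rejected row %r: %s", row, exc)
    log.info("read=%d ok=%d rejected=%d in %.3fs", stats.rows_read,
             stats.rows_ok, stats.rows_rejected, time.perf_counter() - start)
    if stats.rows_read and stats.rows_rejected / stats.rows_read > max_reject_rate:
        log.error("rejection rate %.0f%% exceeds threshold",
                  100 * stats.rows_rejected / stats.rows_read)
    return out


stats = RunStats()
result = run_step(stats, ["1", "2", "x", "4"], int)
print(result, stats.rows_rejected)  # [1, 2, 4] 1
```

The counters are the kind of metrics a dashboard or automated report could consume. Catching only `KeyError` and `ValueError` is deliberate: genuine bugs in the step itself (for example a `TypeError`) still fail loudly instead of being counted as bad data.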

For integration and monitoring, services like ApiX-Drive can also help. ApiX-Drive integrates data across platforms so that it moves between systems without manual steps, which reduces both intervention and the errors it introduces. Its monitoring tools can alert administrators to potential issues, enabling proactive management and maintenance of ETL processes. Combined with logging, error handling, and dashboards, this approach helps keep data warehousing solutions robust, efficient, and reliable.

FAQ

What is an ETL process in data warehousing?

Why is data transformation important in ETL?

How can I automate my ETL processes?

What are the common challenges in designing ETL processes?

How do I ensure data quality in my ETL process?
