ETL Data Mining

ETL (Extract, Transform, Load) is a crucial part of data mining and modern data management, enabling organizations to gather, refine, and use large amounts of data efficiently. By extracting data from various sources, transforming it into a consistent format, and loading it into a data warehouse, ETL provides the reliable foundation that analysis, business intelligence, and decision-making depend on.

Introduction

Within data mining, ETL is the preparation stage. It ensures that data gathered from different sources is clean, accurate, and ready for analysis before any patterns are mined from it, which in turn supports informed decision-making and strategic planning. The process has three stages:

- Extract: Data is collected from multiple sources including databases, APIs, and flat files.

- Transform: The collected data is cleaned, normalized, and converted into a suitable format.

- Load: The transformed data is then loaded into a data warehouse or another storage system for analysis.
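The three stages can be sketched end to end in a few lines of Python. This is a minimal illustration, not a production pipeline. The CSV text, the `sales` table, and the cleaning rules are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for a real source system.
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,
3,carol,7.25
"""

def extract(text: str) -> list[dict[str, str]]:
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: trim and normalize names, drop rows missing an amount."""
    clean = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete records
        clean.append((int(row["id"]), row["name"].strip().title(), float(row["amount"])))
    return clean

def load(rows: list[tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into a warehouse-style table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT name, amount FROM sales ORDER BY id").fetchall())
```

Keeping each stage in its own function mirrors the Extract, Transform, Load split. Each stage can then be tested or replaced independently, for example by swapping SQLite for a real warehouse client.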

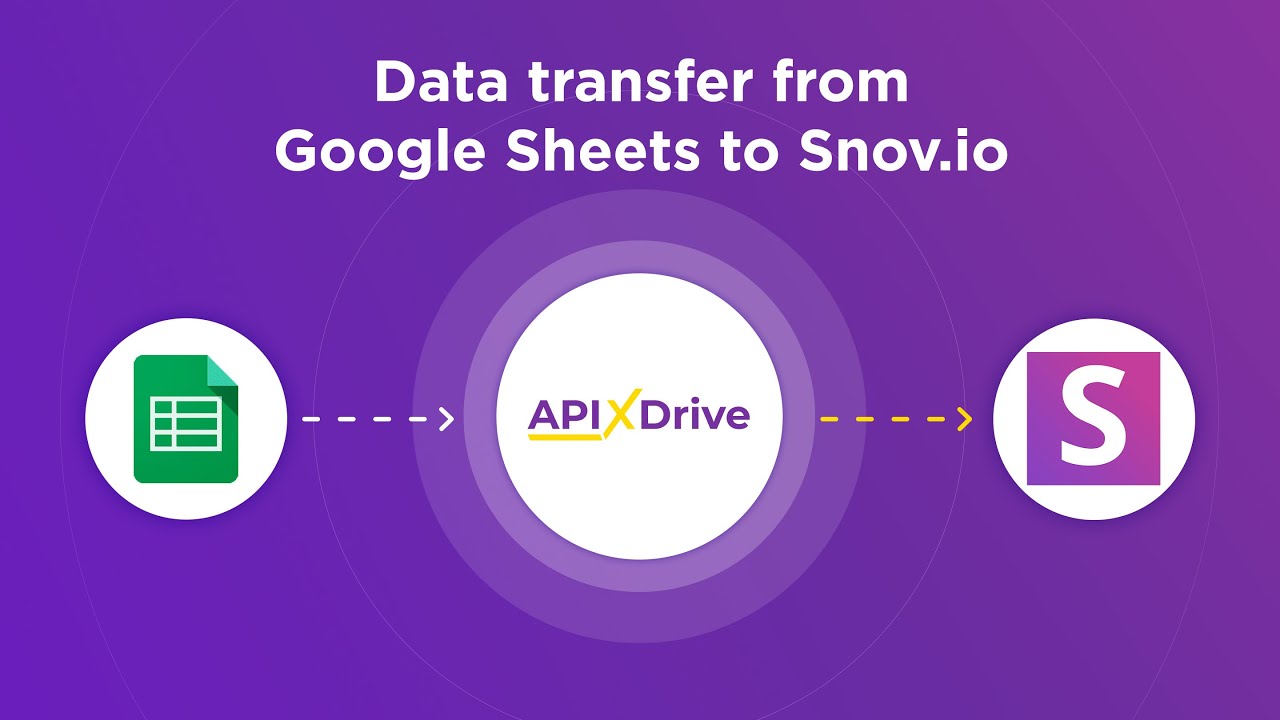

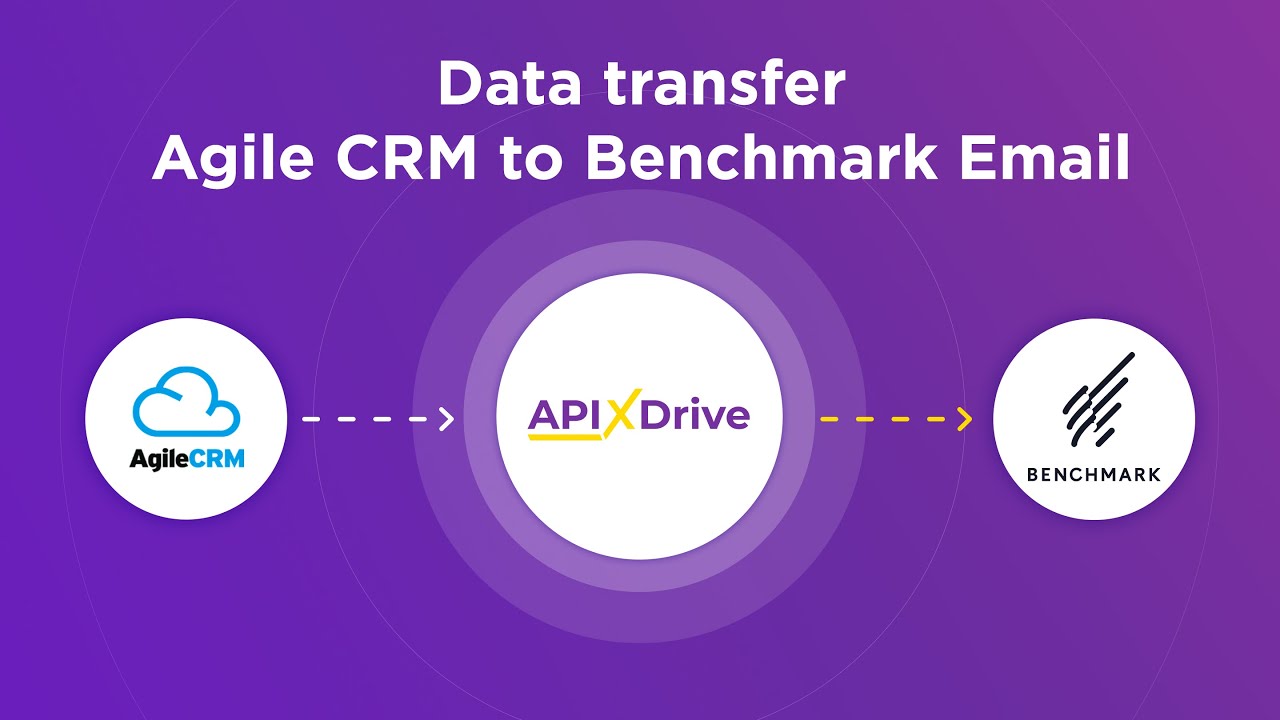

Integrating diverse data sources can be challenging, but tools like ApiX-Drive simplify the process by automating data extraction and transformation. ApiX-Drive supports a wide range of integrations, making it easier for businesses to connect their data ecosystems seamlessly. Utilizing such services enhances the efficiency and reliability of ETL workflows, ultimately empowering data-driven insights and actions.

ETL Process

The ETL process, which stands for Extract, Transform, Load, is a fundamental aspect of data mining. During the extraction phase, data is collected from various sources such as databases, APIs, and flat files. This phase ensures that all relevant data is gathered, regardless of its format or origin. Tools like ApiX-Drive can streamline this process by automating data collection from multiple APIs, thereby reducing the complexity and time required for manual extraction.
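To make the extraction phase concrete, the sketch below pulls records from two differently shaped sources, a CSV flat file and a JSON API response. It maps both onto one record layout and tags each record with its origin. The payloads and field names (`customer_id`, `contact.email`, `source`) are assumptions for illustration. A real extractor would read files or call an HTTP endpoint instead of parsing inline strings.

```python
import csv
import io
import json

# Hypothetical payloads: a flat-file export and an API response body.
CSV_EXPORT = "customer_id,email\n101,ann@example.com\n102,ben@example.com\n"
API_RESPONSE = '{"data": [{"id": 103, "contact": {"email": "cy@example.com"}}]}'

def extract_csv(text):
    """Read a CSV flat file and tag each record with its origin."""
    return [
        {"customer_id": int(r["customer_id"]), "email": r["email"], "source": "csv"}
        for r in csv.DictReader(io.StringIO(text))
    ]

def extract_api(body):
    """Parse a JSON API response; the nested field layout is assumed."""
    payload = json.loads(body)
    return [
        {"customer_id": item["id"], "email": item["contact"]["email"], "source": "api"}
        for item in payload["data"]
    ]

# Both sources now share one layout and can be combined.
records = extract_csv(CSV_EXPORT) + extract_api(API_RESPONSE)
for r in records:
    print(r)
```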

Once the data is extracted, it enters the transformation phase where it is cleaned, normalized, and formatted to meet specific requirements. This step often involves data validation, deduplication, and enrichment to ensure the data's accuracy and consistency. Finally, in the loading phase, the transformed data is loaded into a target data warehouse or database for further analysis. Efficient ETL processes are crucial for accurate data mining, enabling businesses to derive meaningful insights and make data-driven decisions.
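The transformation steps named above (normalization, validation, deduplication, and enrichment) can be sketched as a single pass over the extracted records. The sample records, the `REGION` lookup table, and the deliberately simple email pattern are hypothetical. Real pipelines usually apply stricter validation rules and log rejected rows rather than silently dropping them.

```python
import re

# Hypothetical extracted records, including a duplicate and an invalid email.
raw = [
    {"customer_id": 1, "email": "Ann@Example.com ", "country": "us"},
    {"customer_id": 1, "email": "ann@example.com", "country": "US"},
    {"customer_id": 2, "email": "not-an-email", "country": "DE"},
    {"customer_id": 3, "email": "cy@example.org", "country": "de"},
]

# Assumed lookup table used for enrichment.
REGION = {"US": "North America", "DE": "Europe"}
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def clean_records(records):
    seen = set()
    out = []
    for rec in records:
        # Normalize: trim and lowercase the email, uppercase the country code.
        email = rec["email"].strip().lower()
        country = rec["country"].strip().upper()
        # Validate: drop records whose email fails a simple pattern check.
        if not EMAIL_RE.match(email):
            continue
        # Deduplicate on the normalized business key.
        key = (rec["customer_id"], email)
        if key in seen:
            continue
        seen.add(key)
        # Enrich: derive a region from the country code.
        out.append({
            "customer_id": rec["customer_id"],
            "email": email,
            "country": country,
            "region": REGION.get(country, "Unknown"),
        })
    return out

for row in clean_records(raw):
    print(row)
```

Deduplication runs after normalization on purpose. Otherwise `"Ann@Example.com "` and `"ann@example.com"` would be treated as different customers.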

Data Mining Techniques

Data mining techniques extract valuable insights from large datasets by identifying patterns, correlations, and trends that are not immediately apparent. Several methods are commonly used, each with its own strengths and applications:

- Classification: This technique categorizes data into predefined classes. It is commonly used in spam detection, credit scoring, and medical diagnosis.

- Clustering: Clustering groups similar data points together based on their characteristics. It is useful in market segmentation, image analysis, and customer profiling.

- Association Rule Learning: This method identifies relationships between variables in large databases. It is widely used in market basket analysis and recommendation systems.

- Regression: Regression analysis predicts a continuous value based on the relationship between variables. It is often used in forecasting and risk management.

- Neural Networks: These models, loosely inspired by networks of biological neurons, are used for complex pattern recognition tasks such as image and speech recognition.
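As an illustration of classification, here is a small k-nearest-neighbours classifier, one of many possible classification methods, applied to a hypothetical credit-scoring dataset. The features, labels, and choice of `k=3` are assumptions. The features are not scaled here, so income dominates the distance; a real model would normalize each feature first.

```python
import math
from collections import Counter

# Hypothetical labeled history: (income in k$, debt-to-income ratio) -> label.
training = [
    ((85.0, 0.10), "low_risk"),
    ((72.0, 0.15), "low_risk"),
    ((90.0, 0.20), "low_risk"),
    ((30.0, 0.55), "high_risk"),
    ((25.0, 0.60), "high_risk"),
    ((40.0, 0.50), "high_risk"),
]

def knn_predict(sample, data, k=3):
    """Label a sample by majority vote among its k nearest neighbours."""
    nearest = sorted(data, key=lambda item: math.dist(sample, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(knn_predict((80.0, 0.12), training))  # low_risk
print(knn_predict((35.0, 0.50), training))  # high_risk
```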
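Clustering can be illustrated with k-means (Lloyd's algorithm), a common but not the only clustering method. The sketch groups hypothetical customer points (annual spend, visits per month) into `k` clusters. It alternates an assignment step and an update step until the centroids stop moving. The data and the fixed random seed are assumptions chosen to keep the example reproducible.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means (Lloyd's algorithm) on 2-D points."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initial centroids: k randomly chosen input points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments no longer change
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers: (annual spend in k$, visits per month).
customers = [(1.0, 1.0), (1.5, 2.0), (1.2, 1.4), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, clusters = kmeans(customers, k=2)
for c, members in zip(centroids, clusters):
    print(c, members)
```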
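For association rule learning, the core measures are support and confidence. Support is the share of transactions that contain both sides of a rule. Confidence is how often the right-hand side appears when the left-hand side does. The sketch below brute-forces all item pairs over hypothetical market baskets rather than implementing a full algorithm such as Apriori, and the thresholds are arbitrary.

```python
from collections import Counter
from itertools import combinations

# Hypothetical market-basket transactions.
baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk", "eggs"},
    {"bread", "milk", "eggs"},
]

def pair_rules(transactions, min_support=0.4, min_confidence=0.6):
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in t)
    pair_counts = Counter(pair for t in transactions for pair in combinations(sorted(t), 2))
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n  # share of baskets containing both items
        if support < min_support:
            continue
        # Evaluate the rule in both directions: a -> b and b -> a.
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    return rules

for lhs, rhs, s, c in pair_rules(baskets):
    print(f"{lhs} -> {rhs}: support={s:.2f}, confidence={c:.2f}")
```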
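Simple linear regression fits y = a + b*x by ordinary least squares. The slope b is the covariance of x and y divided by the variance of x, and the intercept is a = mean(y) - b*mean(x). The sketch uses hypothetical ad-spend and sales figures, then forecasts sales for an unseen spend value.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x with a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical monthly ad spend (k$) and sales (k$).
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]
a, b = fit_line(spend, sales)
forecast = a + b * 6.0  # predicted sales for a spend of 6 k$
print(f"sales ~ {a:.2f} + {b:.2f} * spend; forecast at 6.0: {forecast:.2f}")
```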

Integrating these techniques into your data processing workflow can improve decision-making. Tools like ApiX-Drive can support this by automating data transfer between systems, which helps keep the datasets you mine up to date.

Applications and Case Studies

ETL (Extract, Transform, Load) processes are essential in modern data mining applications, enabling organizations to efficiently manage and analyze large datasets. One prominent application is in the field of business intelligence, where ETL pipelines are used to gather data from various sources, transform it into a consistent format, and load it into data warehouses for analysis.

In healthcare, ETL processes integrate patient data from multiple systems, improving the accuracy of medical records and enabling advanced analytics for better patient care. Similarly, in finance, ETL aggregates transactional data from different platforms, supporting compliance reporting and supplying the consolidated data that fraud-detection systems analyze.

- Business Intelligence: Enhancing decision-making through consolidated data.

- Healthcare: Integrating patient data for improved medical outcomes.

- Finance: Ensuring compliance and real-time fraud detection.
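In finance, aggregating transactional data often comes down to reconciling feeds that describe the same kind of transaction differently. The sketch below assumes two hypothetical platform feeds. One reports integer cents under `acct`, and the other reports decimal strings under `account_id`. The sketch maps both onto one schema and totals the amounts per account. `Decimal` is used instead of `float` to avoid binary rounding errors in money amounts.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical feeds from two platforms with different field names and units.
card_feed = [
    {"acct": "A1", "amount_cents": 1250},
    {"acct": "A2", "amount_cents": 400},
]
bank_feed = [
    {"account_id": "A1", "amount": "20.00"},
    {"account_id": "A1", "amount": "5.75"},
]

def unify(card, bank):
    """Map both feeds onto one (account, amount) schema in currency units."""
    rows = [(t["acct"], Decimal(t["amount_cents"]) / 100) for t in card]
    rows += [(t["account_id"], Decimal(t["amount"])) for t in bank]
    return rows

def totals_by_account(rows):
    """Sum amounts per account after unification."""
    totals = defaultdict(Decimal)  # Decimal() starts each total at 0
    for account, amount in rows:
        totals[account] += amount
    return dict(totals)

print(totals_by_account(unify(card_feed, bank_feed)))
```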

ApiX-Drive is a service that simplifies the setup of ETL integrations by transferring data between applications and services. It supports a wide range of connectors, so businesses can automate their ETL workflows without extensive technical expertise. Organizations can then spend their effort on deriving insights rather than managing data pipelines.

Conclusion

In conclusion, ETL underpins data mining by turning raw data into a form from which valuable insights can be drawn, enabling businesses to make informed decisions. The ETL process extracts data from various sources, transforms it (cleaning and standardizing it along the way), and loads it into a centralized data warehouse. As a result, the data is accurate, consistent, and ready for analysis, which ultimately leads to better business outcomes.

Moreover, integrating ETL processes with modern data integration services like ApiX-Drive can significantly enhance efficiency and automation. ApiX-Drive offers seamless integration capabilities, allowing businesses to connect multiple data sources effortlessly. By leveraging such services, organizations can streamline their ETL workflows, reduce manual intervention, and focus more on data analysis and strategic decision-making. Thus, embracing ETL Data Mining and advanced integration tools is key to unlocking the full potential of data in today's competitive landscape.

FAQ

What is ETL in Data Mining?

Why is ETL important for Data Mining?

How can I automate the ETL process?

What are common challenges in ETL processes?

How do I ensure data quality in the ETL process?
